Innovations and Challenges for Cloud-based Video Production

The unprecedented environment of the COVID-19 crisis has forced Hollywood studios to shut down many of their planned productions. At the same time, remote production capabilities have ramped up to help creative talent continue to develop and collaborate while quarantined at home. Even before the pandemic, cloud-based video production technology had enabled remote work for reviewing daily footage, editorial sessions, color grading, audio post-production and VFX. However, several key issues still prevent some types of production work from being done remotely. This article details some of the innovations, developed in response to these traditional challenges, that Qvest has observed among our clients and across the wider industry. Please note that Qvest is a vendor-agnostic consultancy and does not resell vendor products or services.

Abstract

- In the past five years, cloud providers have developed innovative solutions to significant challenges that come with cloud-based video production. Advances in processing, networking, storage and security have enabled more and more of the video production workflow to move into the cloud.

- Recently, amplified by the global pandemic, Hollywood studios are turning to cloud-based technologies for remote production activities. Still, the overall production and distribution workflows continue to present major challenges and inherent inefficiencies due to legacy media technology.

- Traditionally, Hollywood creative talent has benefited from new technology innovations. The industry is on the precipice of significant disruption to legacy media workflows. To empower creative talent, studios must increase their ability to deliver new content faster and more efficiently than today.

In response to mass-market consumer demand, cloud providers have invested in elastic computing, storage and internet access. Film and TV production workflows are benefiting greatly from these advances in cloud-based technology.

Background

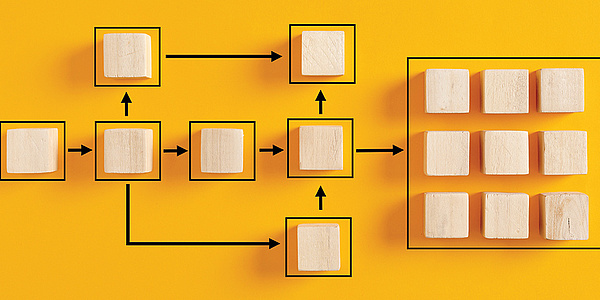

The majority of entertainment content is scripted and non-interactive. While live events, video games, animation and other non-scripted entertainment may follow different phases, scripted content is produced through five major phases that have evolved over time. Figure 1 describes these five phases and their core activities.

Today, new content owners have disrupted the industry, driving demand for new, original content to historic levels. This demand has strained legacy video workflows, which must support a higher volume of production, VFX and post-production resources, both technology and talent. COVID-19 has amplified demand for direct-to-consumer content while adding strain on those same resources, which must now also support remote access.

Innovation: Centralized Media Ingest to the Cloud

CHALLENGE: As content is created, it moves between creative talent and other stakeholders, resulting in unnecessary duplication of media assets and an increased risk of theft and piracy.

Ingest is the process of capturing, uploading and storing video in a file system. Cloud-based storage has matured to the point where multiple cloud vendors support ingesting HD-quality video via direct upload over the internet. Once media files are in the cloud, workflow automation can secure them, scan them with AI services, catalog them for search, transcode them and distribute them, as shown in Figure 2 below.
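The automated ingest flow described above can be pictured as a chain of stages applied to each new file. The following is a minimal Python sketch under stated assumptions: `MediaAsset`, the stage functions and the in-memory `CATALOG` are hypothetical names, and the AI-scan, transcode and distribute steps are placeholders that only record what a real cloud service would do.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class MediaAsset:
    """Hypothetical record for one media file moving through cloud ingest."""
    path: str
    content: bytes
    checksum: str = ""
    tags: List[str] = field(default_factory=list)
    renditions: List[str] = field(default_factory=list)
    history: List[str] = field(default_factory=list)


# Hypothetical in-memory index standing in for a searchable cloud catalog.
CATALOG: Dict[str, List[str]] = {}

Stage = Callable[[MediaAsset], MediaAsset]


def secure(asset: MediaAsset) -> MediaAsset:
    # Fingerprint the original so any later copy can be verified against it.
    asset.checksum = hashlib.sha256(asset.content).hexdigest()
    asset.history.append("secured")
    return asset


def ai_scan(asset: MediaAsset) -> MediaAsset:
    # Placeholder for an AI tagging service: derive tags from the file name only.
    stem = asset.path.rsplit("/", 1)[-1].rsplit(".", 1)[0]
    asset.tags.extend(stem.lower().split("_"))
    asset.history.append("scanned")
    return asset


def catalog(asset: MediaAsset) -> MediaAsset:
    # Index the asset under each tag so the crew can find it with self-service search.
    for tag in asset.tags:
        CATALOG.setdefault(tag, []).append(asset.path)
    asset.history.append("cataloged")
    return asset


def transcode(asset: MediaAsset) -> MediaAsset:
    # Placeholder for a transcoder: record the renditions that would be produced.
    asset.renditions = [asset.path + ".proxy_720p.mp4", asset.path + ".h264_1080p.mp4"]
    asset.history.append("transcoded")
    return asset


def distribute(asset: MediaAsset) -> MediaAsset:
    # Placeholder for publishing renditions to reviewers or downstream vendors.
    asset.history.append("distributed")
    return asset


PIPELINE: List[Stage] = [secure, ai_scan, catalog, transcode, distribute]


def ingest(asset: MediaAsset, stages: List[Stage] = PIPELINE) -> MediaAsset:
    """Run each stage in order; a production system would add retries and error handling."""
    for stage in stages:
        asset = stage(asset)
    return asset


clip = ingest(MediaAsset("dailies/scene12_take3.mov", b"example bytes"))
print(clip.history)      # ['secured', 'scanned', 'cataloged', 'transcoded', 'distributed']
print(CATALOG["take3"])  # ['dailies/scene12_take3.mov']
```

Keeping each stage as a small function makes it possible to swap a placeholder for a real service call without changing the orchestration.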

The primary benefit of ingesting all media assets into the cloud immediately upon creation is a “single source of truth” for the production. This enables the entire crew on a project to locate the latest version of an asset using self-service tools such as online search. The primary roadblock to centralized cloud ingest is a lack of support for capturing media files at their source and seamlessly synchronizing them with cloud storage.

AI/ML can also automate the often arduous process of tagging, grouping and resizing assets as soon as they are loaded into the system, so team members no longer have to spend countless hours manually organizing metadata and formatting assets. For example, with AI connected to your Digital Asset Management (DAM) system, you could add a photo of a product, say a phone, and the AI engine could identify the brand, the product, the location, contextual metadata associated with the background imagery and various other useful metadata values.

QTAKE is an independent software vendor (ISV) with innovative software and hardware modules that capture assets and metadata at the source while on-set. QTAKE describes its product as a universal tool for camera monitoring when combined with the right hardware. The core module is deployed on-set and runs on a powerful Mac workstation.

The core module integrates with professional-quality video cards and supports advanced video codecs, enabling on-the-fly metadata capture and clip indexing at the source. QTAKE automatically generates low-resolution proxy videos that can be viewed immediately on-set on both workstations and tablets, and that feed into color and VFX workflows. Getting these files to the cloud, however, requires additional technology.
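As a generic illustration of proxy generation (not QTAKE's implementation, which is not described here), the sketch below builds an `ffmpeg` command that scales a camera file down to a 720p H.264 proxy. It assumes `ffmpeg` built with `libx264` is available; the function name `proxy_command` and the encoder settings are assumptions chosen for illustration.

```python
import shlex
from pathlib import Path
from typing import List


def proxy_command(source: str, height: int = 720, crf: int = 23) -> List[str]:
    """Build (but do not run) an ffmpeg command for a small H.264 review proxy."""
    target = str(Path(source).with_suffix("")) + f"_proxy{height}p.mp4"
    return [
        "ffmpeg", "-i", source,
        # Scale to the target height; width -2 preserves the aspect ratio with an even value.
        "-vf", f"scale=-2:{height}",
        # Fast H.264 encode; a higher CRF gives a smaller, lower-quality file.
        "-c:v", "libx264", "-preset", "veryfast", "-crf", str(crf),
        "-c:a", "aac", "-b:a", "128k",
        # Put the index at the start of the file so playback can begin while it downloads.
        "-movflags", "+faststart",
        target,
    ]


print(shlex.join(proxy_command("A001_C002.mov")))
```

To actually encode, the returned list could be passed to `subprocess.run(cmd, check=True)`; building the argument list separately keeps it easy to inspect and log.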

PixStor is a high-speed, on-set video storage system that natively integrates with AWS storage when the PixStor Cloud option is purchased. PixStor Cloud extends the on-set storage appliance into AWS EBS (block) and S3 (object) storage under a single file namespace. Its selective data movement feature provides intelligent file synchronization by moving files only when required, thereby minimizing costly data egress charges.
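The principle behind selective data movement, moving a file only when it is actually needed, can be illustrated with a small policy function. The sketch below is hypothetical and is not PixStor's algorithm: it assumes uploads into the cloud are cheap, so every on-set-only file is pushed up, while cloud-only files are pulled back (incurring egress) only when someone requests them. `FileState`, `plan_moves`, `egress_gb` and the file sizes are illustrative.

```python
from dataclasses import dataclass
from typing import Iterable, List, Set, Tuple


@dataclass
class FileState:
    path: str
    size_gb: float
    in_cloud: bool   # a copy exists in cloud object storage
    on_site: bool    # a copy exists on the on-set appliance


def plan_moves(files: Iterable[FileState], requested: Set[str]) -> Tuple[List[str], List[str]]:
    """Return (uploads, recalls) under a 'move only when required' policy."""
    uploads, recalls = [], []
    for f in files:
        if f.on_site and not f.in_cloud:
            uploads.append(f.path)   # protect new on-set media in the cloud
        elif f.in_cloud and not f.on_site and f.path in requested:
            recalls.append(f.path)   # pull back only what someone asked for
    return uploads, recalls


def egress_gb(files: Iterable[FileState], recalls: List[str]) -> float:
    # Data leaving the cloud is what typically incurs egress charges.
    wanted = set(recalls)
    return sum(f.size_gb for f in files if f.path in wanted)


library = [
    FileState("A001_C001.mov", 120.0, in_cloud=True, on_site=False),
    FileState("A001_C002.mov", 80.0, in_cloud=True, on_site=False),
    FileState("A002_C001.mov", 95.0, in_cloud=False, on_site=True),
]
uploads, recalls = plan_moves(library, requested={"A001_C002.mov"})
print(uploads, recalls, egress_gb(library, recalls))
```

In this toy example only the requested 80 GB clip leaves the cloud, whereas naively mirroring every cloud-only file back on-set would move 200 GB.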

Once media in the cloud is finalized, it needs to be organized in a way that optimizes distribution. Companies like Netflix are innovating in content distribution by standardizing their library of digital masters on a scalable format. Netflix chose to implement the Interoperable Master Format (IMF) packaging standard created by the Society of Motion Picture and Television Engineers (SMPTE). Using IMF, Netflix can hold a single set of core assets plus the unique elements needed to localize content, and reports a 95% reduction in asset duplication. By packaging primary AV, supplemental AV, subtitles, non-English audio and other assets needed for global distribution into an “uber” master, Netflix avoids re-creating assets when servicing localized content.
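A simplified model shows why referencing shared assets reduces duplication. In the sketch below, each localized version is a list of asset IDs, loosely modeled on an IMF Composition Playlist; it is not the IMF XML schema, and the asset names and sizes (in MB) are made up, so the resulting savings say nothing about Netflix's reported figure.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class TrackFile:
    asset_id: str
    kind: str     # e.g. "video", "audio", "subtitle"
    size_mb: int


@dataclass
class Composition:
    """Simplified stand-in for a composition: an ordered list of referenced asset IDs."""
    locale: str
    asset_ids: List[str]


def shared_size(by_id: Dict[str, TrackFile], comps: List[Composition]) -> int:
    # Each referenced asset is stored once, however many compositions use it.
    used = {aid for c in comps for aid in c.asset_ids}
    return sum(by_id[aid].size_mb for aid in used)


def flattened_size(by_id: Dict[str, TrackFile], comps: List[Composition]) -> int:
    # Baseline: every localized version stored as its own self-contained master.
    return sum(by_id[aid].size_mb for c in comps for aid in c.asset_ids)


tracks = [
    TrackFile("picture", "video", 500_000),
    TrackFile("audio_en", "audio", 5_000),
    TrackFile("audio_fr", "audio", 5_000),
    TrackFile("audio_de", "audio", 5_000),
    TrackFile("subs_fr", "subtitle", 10),
    TrackFile("subs_de", "subtitle", 10),
]
by_id = {t.asset_id: t for t in tracks}
comps = [
    Composition("en", ["picture", "audio_en"]),
    Composition("fr", ["picture", "audio_fr", "subs_fr"]),
    Composition("de", ["picture", "audio_de", "subs_de"]),
]
print(shared_size(by_id, comps), flattened_size(by_id, comps))  # 515020 1515020
```

The large picture track dominates storage, so storing it once and referencing it from every localized version is where most of the savings come from.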

The complete vision for cloud asset ingest accounts for every file type generated during a production, starting with the script. To fully realize the benefits of centralized cloud ingest, studios, cloud providers and software vendors will need to keep working together on solutions that securely create, encrypt, upload and store media assets over the internet.

Innovation: Cloud-based Real-time Collaboration

CHALLENGE: The traditional production process is mostly linear, making creative iteration a time-consuming and costly undertaking.

As Figure 1 shows, video production is a complex process that spans multiple phases. Because the phases are isolated from each other, the definitive version of the content emerges late in the process, and numerous iterations between development and final editing are spent visualizing the content according to the creative direction. When media is uploaded directly to the cloud, these downstream workflows can begin to run in parallel with the support of real-time collaboration technologies, some examples of which are identified in Figure 3 below.

The vendor landscape for real-time collaboration software is highly fragmented and focused on business users. For video productions, Moxion is an ISV that offers an “instant dailies” service it calls “Immediates”. Unlike traditional dailies, Moxion Immediates are available for review within seconds of upload from the camera. Moxion’s platform handles HD-quality video and automatically generates a proxy video that is shared with the members of the project, all in near real time. Within the platform, users can view the footage and collaborate to share, comment on, search and assemble content. For precise feedback, annotation tools are available to mark up footage on both desktop and mobile devices.

Historically, creative talent has needed to live in cities close to productions and their media files. With cloud-native storage and virtual workstation streaming, this work can take place almost anywhere in the world. This opens up opportunities for talent in markets that were previously out of reach, and creates new jobs for highly specialized tasks that can be performed by a new pool of candidates. As media applications integrate natively with cloud-based storage, the reach of creative tools for productions expands greatly.

Teradici has been helping M&E companies deploy secure virtualized workstations using its PCoIP solutions. Teradici describes PCoIP as a multi-codec remote display protocol that delivers high color and visual accuracy from the data center to an endpoint of the user's choosing. Once deployed on AWS, Azure or GCP, Teradici pairs with the Nvidia RTX Server and Quadro graphics cards to provide a remote virtual workstation that securely accesses cloud storage. To handle a variety of bandwidth conditions, Teradici has developed algorithms that dynamically tune the remote display experience up or down based on the integrity of the connection.
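The general idea of tuning display quality to connection conditions can be sketched with a simple step-up/step-down controller. This is not Teradici's algorithm, which is not described here. Everything below is hypothetical: the quality ladder, the bandwidth thresholds, the 25% headroom and the 1% packet-loss limit are illustrative values, not PCoIP parameters.

```python
from typing import List, Tuple

# Hypothetical quality ladder: (name, bandwidth needed in Mbit/s).
LEVELS: List[Tuple[str, float]] = [("low", 5.0), ("medium", 15.0), ("high", 30.0), ("max", 60.0)]


def next_level(current: int, bandwidth_mbps: float, loss_pct: float,
               headroom: float = 1.25, max_loss_pct: float = 1.0) -> int:
    """Pick the quality level for the next interval from recent link measurements."""
    if loss_pct > max_loss_pct or bandwidth_mbps < LEVELS[current][1]:
        return max(current - 1, 0)  # link is struggling: step down one level
    if current + 1 < len(LEVELS) and bandwidth_mbps >= LEVELS[current + 1][1] * headroom:
        return current + 1          # clear spare capacity: step up one level
    return current                  # otherwise keep the current level


level = 1  # start at "medium"
trace = []
for bw, loss in [(40, 0.1), (40, 0.1), (80, 0.0), (20, 2.0), (10, 0.5)]:
    level = next_level(level, bw, loss)
    trace.append(LEVELS[level][0])
print(trace)  # ['high', 'high', 'max', 'high', 'medium']
```

Requiring headroom before stepping up, but stepping down as soon as loss or a shortfall appears, keeps the controller from flip-flopping between two levels on a borderline link.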

Studios that have begun to use cloud asset ingest and remote virtual creative workstations report increased confidence in their data security. With a single source of truth for media assets, workflows that rely on external vendors no longer require those vendors to maintain copies of the files. Access to assets can be granted and revoked online quickly, with immediate enforcement. Despite these advancements, maintaining version control and eliminating “shadow” repositories of media assets remain challenges. However, as studios gain trust in these new technologies, production budgets for on-site storage and equipment will shift to cloud and internet providers. The hope is that these changes will lower the barrier to entry for new vendors, increasing competition and improving the end-user experience.
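Because every vendor reads from the same cloud copy, access control reduces to one central check on each read. The sketch below illustrates that idea with a hypothetical `AccessRegistry`; a real deployment would rely on the cloud provider's identity and access management service rather than an in-memory dictionary, and timestamps here are plain numbers for simplicity.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class AccessRegistry:
    """Hypothetical central grant table consulted on every read of a cloud asset."""
    grants: Dict[Tuple[str, str], float] = field(default_factory=dict)  # (user, asset) -> expiry

    def grant(self, user: str, asset_id: str, expires_at: float) -> None:
        self.grants[(user, asset_id)] = expires_at

    def revoke(self, user: str, asset_id: str) -> None:
        # Deleting the single central entry blocks the very next read; no vendor copy to chase.
        self.grants.pop((user, asset_id), None)

    def can_read(self, user: str, asset_id: str, now: float) -> bool:
        expiry = self.grants.get((user, asset_id))
        return expiry is not None and now < expiry


registry = AccessRegistry()
registry.grant("vfx_vendor", "scene12_take3", expires_at=100.0)
print(registry.can_read("vfx_vendor", "scene12_take3", now=50.0))  # True
registry.revoke("vfx_vendor", "scene12_take3")
print(registry.can_read("vfx_vendor", "scene12_take3", now=50.0))  # False
```

Giving every grant an expiry means access also lapses on its own when a vendor's engagement ends, even if nobody remembers to revoke it.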

Conclusion

As cloud-based video production becomes the norm, content owners will be able to develop and produce more titles with more predictability across production operations. Early renderings viewable as cloud immediates can help speed critical decisions about budgets, marketing and creative intent. Further downstream, teams working on large franchises can realize synergistic benefits as they simultaneously develop assets shared by the production. As technology vendors work together to standardize software integrations and security protocols, other industry participants will need to collaborate on cloud-native tools, services and workflows that address the remaining challenges to standardization and user adoption.

To achieve this, participants must set in motion a clearly defined plan for where the industry needs to be in the coming years, along with a coordinated approach to research, learnings and proposals that can be shared with the community for refinement. A conservative timeline for these changes is ten years, and it will evolve through ongoing engagement among stakeholders. Most importantly, the many companies involved throughout the different phases and processes must continue to innovate.